In Part 1, Part 2, Part 3, and Part 4, I covered the legal basis, backup strategy, policy implementation, locking the recovery points stored in the vault, applying vault policies, legal holds, and using Audit Manager to monitor backups and generate automated reports.

In this part, I will explore two essential topics that are also DORA requirements: restore testing, and monitoring and alarming.

Restore Testing

Restore testing was announced late last year, on Nov 27, 2023. It is extremely useful and can ease the operational overhead of managing backups.

…[Restore Testing] helps perform automated and periodic restore tests of supported AWS resources that have been backed up…customers can test recovery readiness to prepare for possible data loss events and to measure duration times for restore jobs to satisfy compliance or regulatory requirements.

Doesn’t that sound amazing? You can practically automate the health check of your backups, including snapshots and continuous recovery points, and ensure they are restorable. Furthermore, you get a record of how long each restore takes, so the policies and procedures you submit to auditors can be based on measured values that reflect the reality of the infrastructure.

Restore testing also works together with the Audit Manager feature, which you can use to generate compliance reports on the restoration of recovery points.
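As a minimal sketch of how those restore durations could be turned into numbers for a procedure document: the function below works on dictionaries shaped like the `RestoreJobs` entries returned by boto3's `list_restore_jobs` call (fields `RestoreJobId`, `Status`, `CreationDate`, `CompletionDate`). The sample data is made up for illustration, and the averaging is my own choice, not something AWS Backup does for you.

```python
from datetime import datetime, timedelta, timezone
from statistics import mean


def restore_durations(restore_jobs):
    """Map restore job ID to duration for jobs that completed successfully."""
    durations = {}
    for job in restore_jobs:
        started = job.get("CreationDate")
        finished = job.get("CompletionDate")
        # Failed or still-running jobs have no meaningful duration yet.
        if job.get("Status") == "COMPLETED" and started and finished:
            durations[job["RestoreJobId"]] = finished - started
    return durations


def average_minutes(durations):
    """Average restore time in minutes, or None if there is nothing to average."""
    if not durations:
        return None
    return mean(d.total_seconds() for d in durations.values()) / 60


# Hypothetical sample data standing in for a real list_restore_jobs response.
start = datetime(2024, 1, 1, 7, 30, tzinfo=timezone.utc)
sample_jobs = [
    {"RestoreJobId": "job-1", "Status": "COMPLETED",
     "CreationDate": start, "CompletionDate": start + timedelta(minutes=12)},
    {"RestoreJobId": "job-2", "Status": "COMPLETED",
     "CreationDate": start, "CompletionDate": start + timedelta(minutes=18)},
    {"RestoreJobId": "job-3", "Status": "FAILED", "CreationDate": start},
]

durations = restore_durations(sample_jobs)
print(f"Average restore time: {average_minutes(durations)} minutes")
```

With real data, the input would come from something like `boto3.client("backup").list_restore_jobs()["RestoreJobs"]`, ideally filtered down to the jobs created by your restore testing plan.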

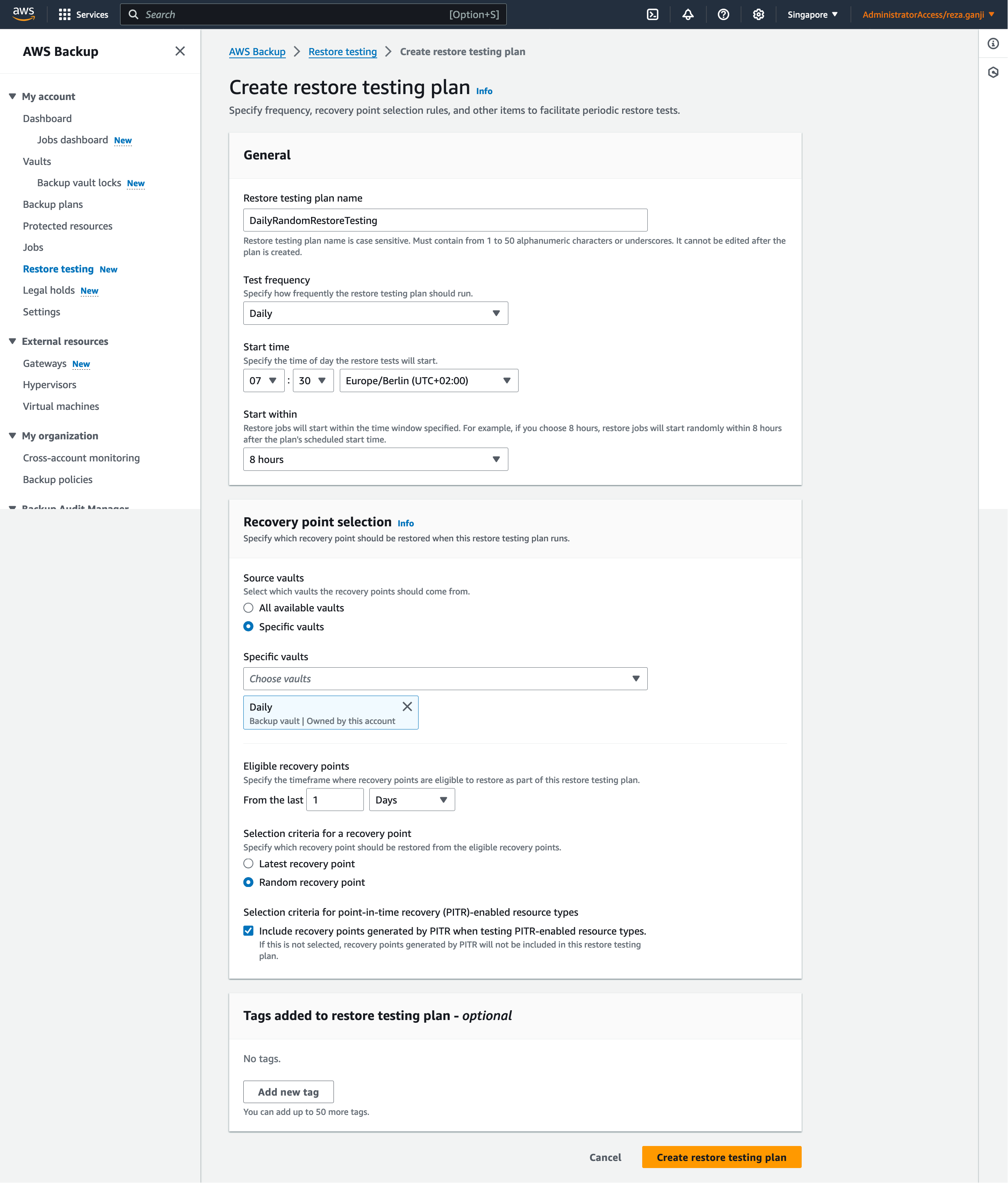

To get started with restore testing, go to the Backup console, and from the navigation sidebar, click on Restore Testing. Then click on “Create restore testing plan”.

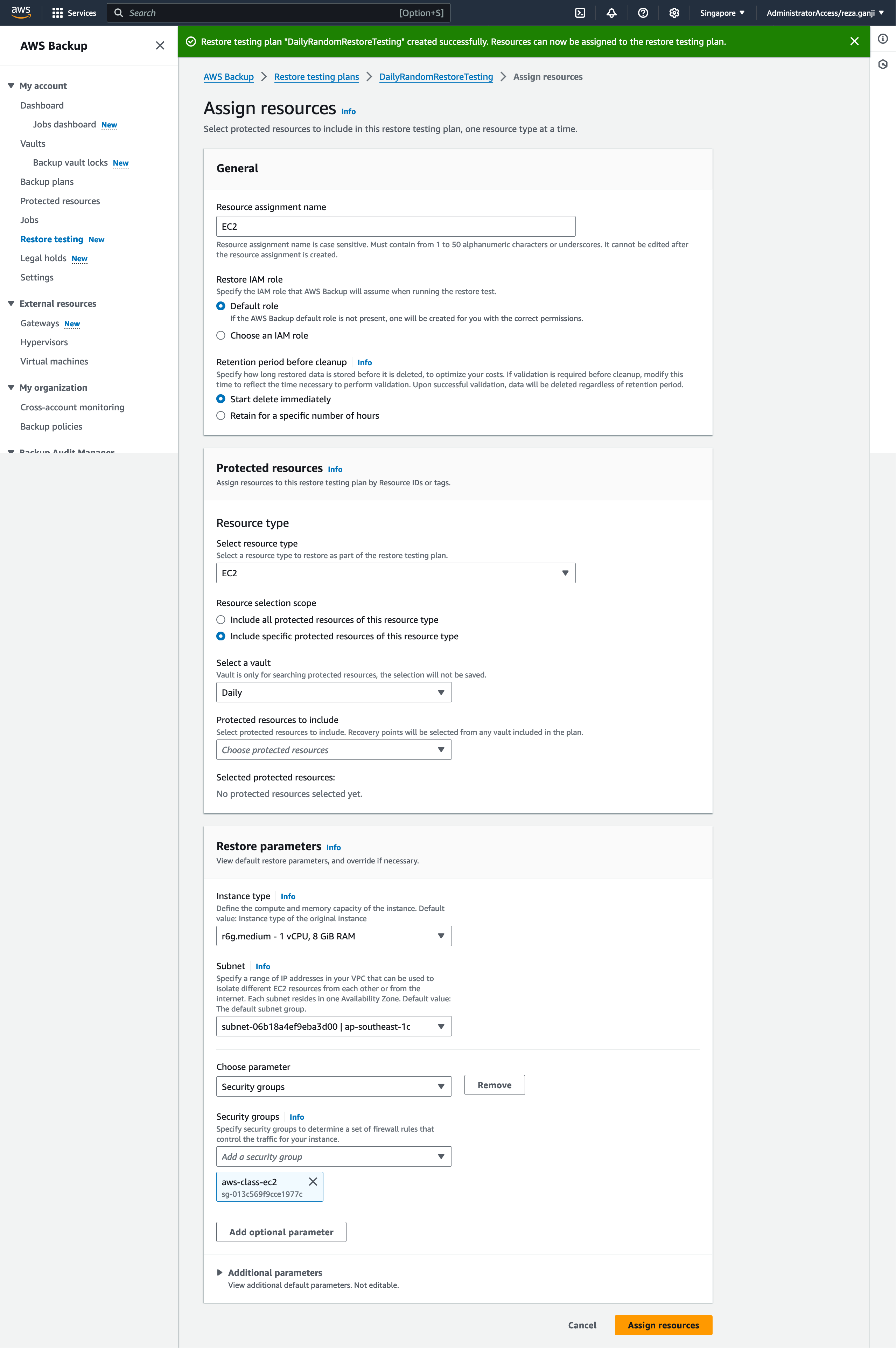

Once the restore plan is created, you will be redirected to the resource selection page. One important note: each resource type requires specific metadata so that AWS Backup can restore the resource correctly.

Important:

AWS Backup can infer that a resource should be restored to the default setting, such as an Amazon EC2 instance or Amazon RDS cluster restored to the default VPC. However, if the default is not present, for example the default VPC or subnet has been deleted and no metadata override has been input, the restore will not be successful.

| Resource type | Inferred restore metadata keys and values | Overridable metadata |
|---|---|---|
| DynamoDB | deletionProtection, where value is set to false; encryptionType is set to Default; targetTableName, where value is set to a random value starting with awsbackup-restore-test- | encryptionType; kmsMasterKeyArn |
| Amazon EBS | availabilityZone, whose value is set to a random availability zone; encrypted, whose value is set to true | availabilityZone; kmsKeyId |
| Amazon EC2 | disableApiTermination value is set to false; instanceType value is set to the instanceType of the recovery point being restored; requireImdsV2 value is set to true | iamInstanceProfileName (value can be null or UseBackedUpValue); instanceType; requireImdsV2; securityGroupIds; subnetId |
| Amazon EFS | encrypted value is set to true; file-system-id value is set to the file system ID of the recovery point being restored; kmsKeyId value is set to alias/aws/elasticfilesystem; newFileSystem value is set to true; performanceMode value is set to generalPurpose | kmsKeyId |
| Amazon FSx for Lustre | lustreConfiguration has nested keys. One nested key is automaticBackupRetentionDays, the value of which is set to 0 | kmsKeyId; lustreConfiguration has nested key logConfiguration; securityGroupIds; subnetIds (required for successful restore) |
| Amazon FSx for NetApp ONTAP | name is set to a random value starting with awsbackup_restore_test_; ontapConfiguration has nested keys, including junctionPath (where /name is the name of the volume being restored), sizeInMegabytes (set to the size in megabytes of the recovery point being restored), and snapshotPolicy (set to none) | ontapConfiguration has specific overridable nested keys, including junctionPath, ontapVolumeType, securityStyle, sizeInMegabytes, storageEfficiencyEnabled, storageVirtualMachineId (required for successful restore), and tieringPolicy |
| Amazon FSx for OpenZFS | openZfsConfiguration, which has nested keys, including automaticBackupRetentionDays (set to 0), deploymentType (set to the deployment type of the recovery point being restored), and throughputCapacity (based on the deploymentType: 64 for SINGLE_AZ_1, 160 for SINGLE_AZ_2 or MULTI_AZ_1) | kmsKeyId; openZfsConfiguration has specific overridable nested keys, including deploymentType, throughputCapacity, and diskiopsConfiguration; securityGroupIds; subnetIds |
| Amazon FSx for Windows File Server | windowsConfiguration, which has nested keys, including automaticBackupRetentionDays (set to 0), deploymentType (set to the deployment type of the recovery point being restored), and throughputCapacity (set to 8) | kmsKeyId; securityGroupIds; subnetIds (required for successful restore); windowsConfiguration, with specific overridable nested keys throughputCapacity, activeDirectoryId (required for successful restore), and preferredSubnetId |
| Amazon RDS, Aurora, Amazon DocumentDB, Amazon Neptune clusters | availabilityZones with value set to a list of up to three random availability zones; dbClusterIdentifier with a random value starting with awsbackup-restore-test; engine with value set to the engine of the recovery point being restored | availabilityZones; databaseName; dbClusterParameterGroupName; dbSubnetGroupName; enableCloudwatchLogsExports; enableIamDatabaseAuthentication; engine; engineMode; engineVersion; kmskeyId; port; optionGroupName; scalingConfiguration; vpcSecurityGroupIds |
| Amazon RDS instances | dbInstanceIdentifier with a random value starting with awsbackup-restore-test-; deletionProtection with value set to false; multiAz with value set to false; publiclyAccessible with value set to false | allocatedStorage; availabilityZones; dbInstanceClass; dbName; dbParameterGroupName; dbSubnetGroupName; domain; domainIamRoleName; enableCloudwatchLogsExports; enableIamDatabaseAuthentication; iops; licensemodel; multiAz; optionGroupName; port; processorFeatures; publiclyAccessible; storageType; vpcSecurityGroupIds |
| Amazon Simple Storage Service (Amazon S3) | destinationBucketName with a random value starting with awsbackup-restore-test-; encrypted with value set to true; encryptionType with value set to SSE-S3; newBucket with value set to true | encryptionType; kmsKey |

Note that Restore Testing is account-specific and cannot be configured at the organization level yet. This means you will need to apply this configuration to every account in the organization that requires automatic restore testing.

Let’s create a resource selection or assignment for the restore plan:

As you can see, the configuration I must provide depends on the resource type I have selected. In this case, I selected the resource type EC2 and chose a subnet that I use for restore testing only. It is isolated, does not interfere with my production environment, and has no internet access, inbound or outbound.

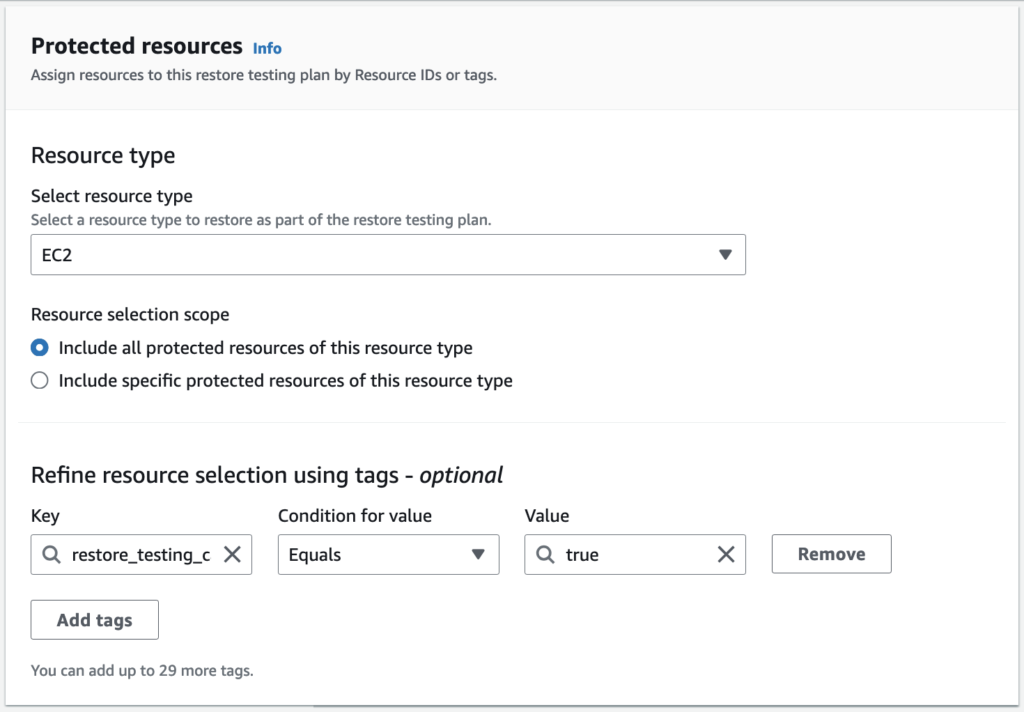

Optionally, you can tag your resources to make selection easier. In Part 2, I created a tag called restore_testing_candidate = true to be used explicitly for this part. With that tag, I know which resources within my infrastructure are in scope for the audit and require a restore testing compliance report. By using the tag selection in AWS Backup Restore Testing, I can include only those specific resources:

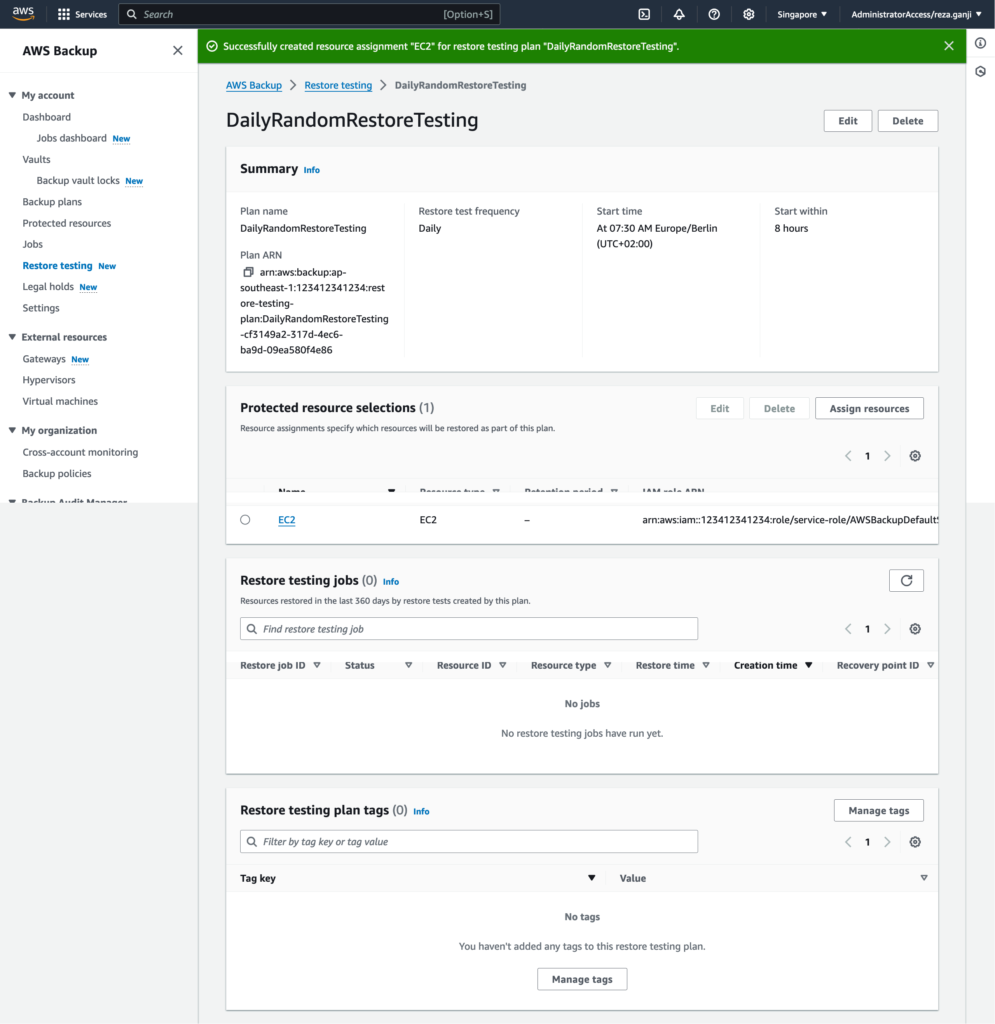

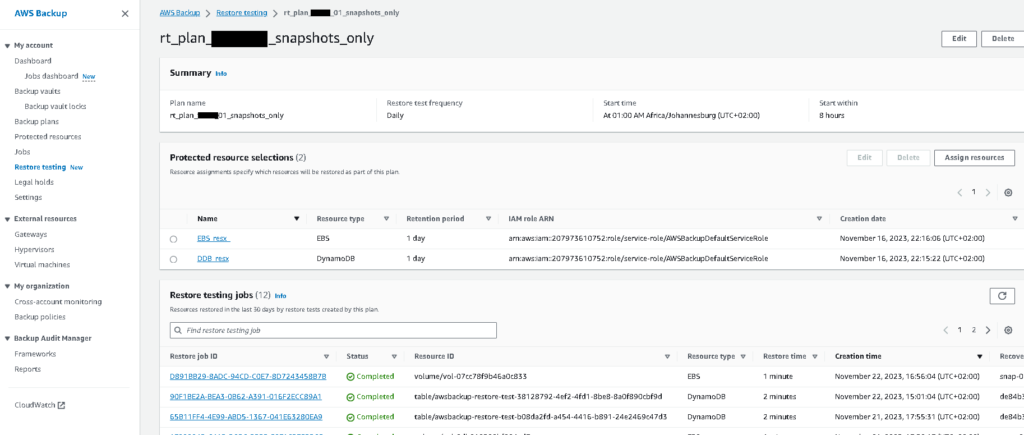
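For those who prefer the API over the console, here is a minimal sketch of the same resource selection as a request payload for boto3's `create_restore_testing_selection`. The selection name, role ARN, and subnet ID are hypothetical placeholders, and the `aws:ResourceTag/` key prefix is how I understand tag conditions to be expressed, so verify it against the API reference before relying on it.

```python
def build_restore_testing_selection(selection_name, iam_role_arn, subnet_id,
                                    tag_key="restore_testing_candidate",
                                    tag_value="true"):
    """Build the RestoreTestingSelection payload for tagged EC2 instances."""
    return {
        "RestoreTestingSelectionName": selection_name,
        "ProtectedResourceType": "EC2",
        "IamRoleArn": iam_role_arn,
        # Wildcard ARN plus a tag condition: only tagged resources are selected.
        "ProtectedResourceArns": ["*"],
        "ProtectedResourceConditions": {
            "StringEquals": [
                {"Key": f"aws:ResourceTag/{tag_key}", "Value": tag_value}
            ]
        },
        # Restore into the isolated test subnet instead of the inferred default.
        "RestoreMetadataOverrides": {"subnetId": subnet_id},
    }


def create_selection(client, plan_name, selection):
    """Send the payload; `client` is expected to be boto3.client('backup')."""
    return client.create_restore_testing_selection(
        RestoreTestingPlanName=plan_name,
        RestoreTestingSelection=selection,
    )


# Hypothetical values for illustration only.
selection = build_restore_testing_selection(
    selection_name="ec2_restore_candidates",
    iam_role_arn="arn:aws:iam::111111111111:role/restore-testing-role",
    subnet_id="subnet-0123456789abcdef0",
)
print(selection["ProtectedResourceConditions"])
```

Keeping the payload in a plain function makes it easy to reuse in every account, since restore testing has to be configured per account.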

And finally, this is what my restore testing plan looks like:

I configured the restore testing jobs to be scheduled at 7:30 AM and to start within 8 hours of that time. During this window, keep an eye on your EC2 quotas if a large number of instances are being restored via restore testing. Failure monitoring is covered in the next section.

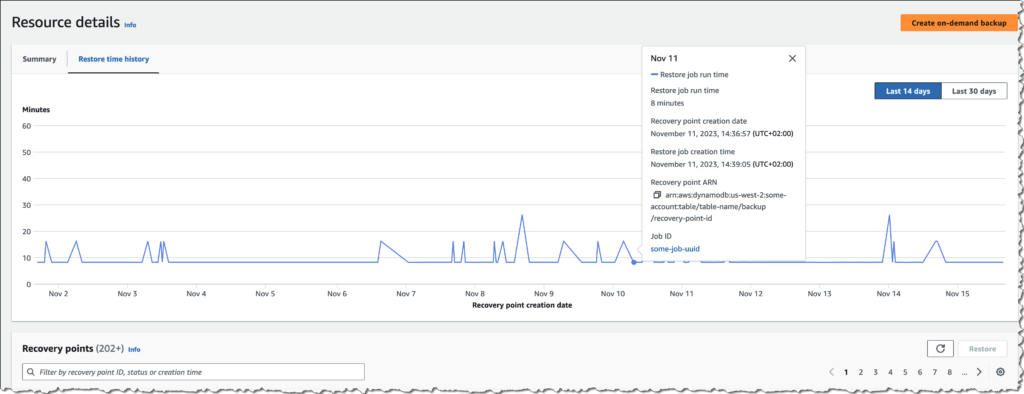
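The plan itself can be sketched as a payload for boto3's `create_restore_testing_plan`. The 7:30 AM start and the 8-hour start window come from my setup above; the daily frequency, the plan name, the selection algorithm, and the 7-day selection window are assumptions I picked for this example.

```python
def build_restore_testing_plan(plan_name, vault_arns, hour=7, minute=30,
                               start_window_hours=8, selection_window_days=7):
    """Build the RestoreTestingPlan payload for a daily restore test."""
    return {
        "RestoreTestingPlanName": plan_name,
        # AWS cron format: minute hour day-of-month month day-of-week year.
        "ScheduleExpression": f"cron({minute} {hour} * * ? *)",
        "StartWindowHours": start_window_hours,
        "RecoveryPointSelection": {
            # Assumption: always test the most recent recovery point.
            "Algorithm": "LATEST_WITHIN_WINDOW",
            "IncludeVaults": list(vault_arns),
            "RecoveryPointTypes": ["SNAPSHOT", "CONTINUOUS"],
            "SelectionWindowDays": selection_window_days,
        },
    }


def create_plan(client, plan):
    """Send the payload; `client` is expected to be boto3.client('backup')."""
    return client.create_restore_testing_plan(RestoreTestingPlan=plan)


# "*" includes every vault in the account; the plan name is hypothetical.
plan = build_restore_testing_plan("dora_restore_testing", ["*"])
print(plan["ScheduleExpression"])
```

If your organization works in a specific time zone, the API also accepts a `ScheduleExpressionTimezone` field, which I left out here to keep the sketch short.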

Once the restore testing jobs get executed, you will be able to view the job together with the history:

A few notes from experience:

- AWS Backup policy configured at the org level is limited to tags for resource selection.

- Do not enable the vault lock before you are 100% certain all the backup configurations are accurate.

- Read all the limitations and requirements carefully, particularly about the backup schedule and not including a single resource in two backup policies.

- Configure everything with IaC to ensure it can be reapplied and changed easily across the org.

Backup Monitoring

There are multiple ways to monitor backup jobs, and I will go through all of them:

- Cross-account monitoring

- Jobs Dashboard

- CloudWatch

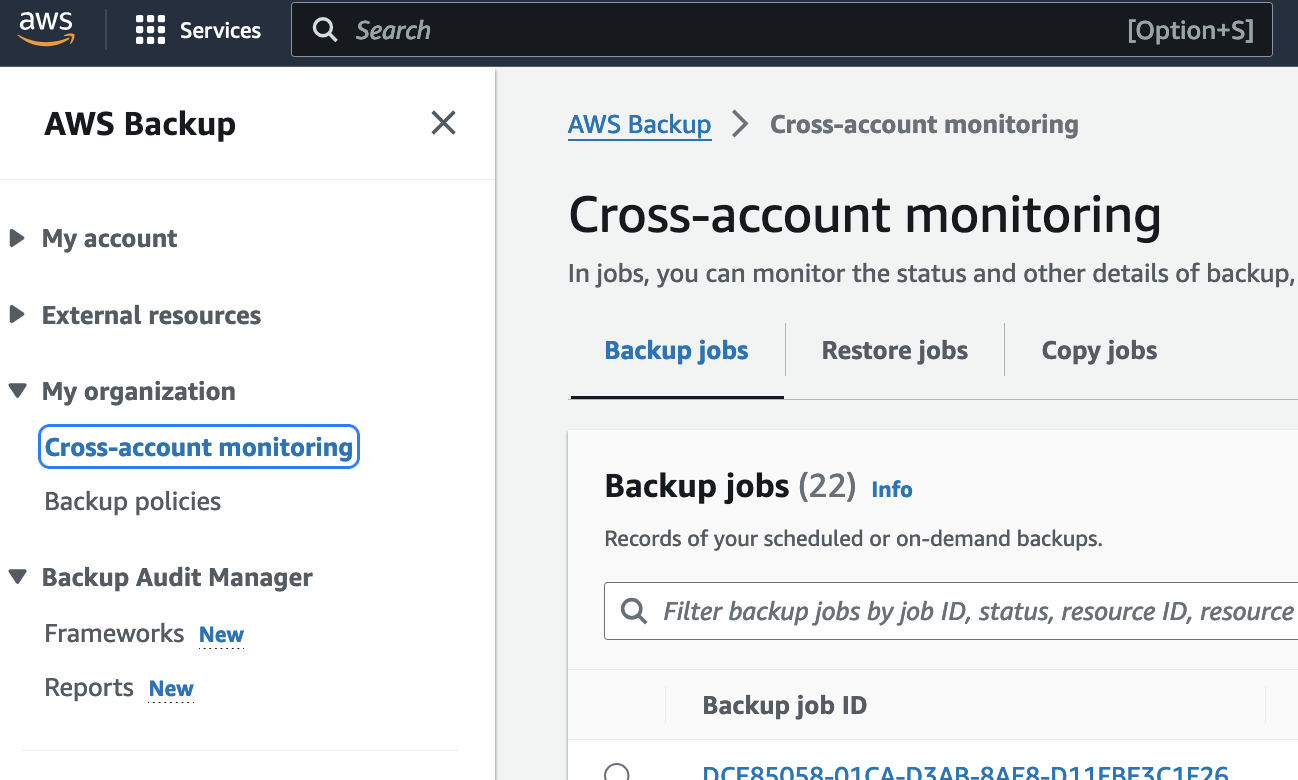

Cross-account monitoring

Cross-account monitoring provides the capability to monitor all backup, restore, and copy jobs across the organization from the management (root) account or the delegated backup administrator account. Jobs can be filtered by job ID, job status (failed, expired, etc.), resource type, message category (access denied, etc.), or account ID.

One of the biggest advantages is the centralized oversight it provides. Instead of having to log in to each AWS account separately to check backup jobs and policies, AWS Backup Cross-Account Monitoring gives me a unified view of metrics, job statuses, and overall resource coverage. This kind of visibility is a game-changer for keeping tabs on backup health and ensuring compliance across the board. It’s also incredibly useful for policy enforcement. I can define backup plans at an organizational level and apply them consistently across all accounts. This helps me sleep better at night, knowing that the data protection standards I’ve set up are being followed everywhere, not just in one account.

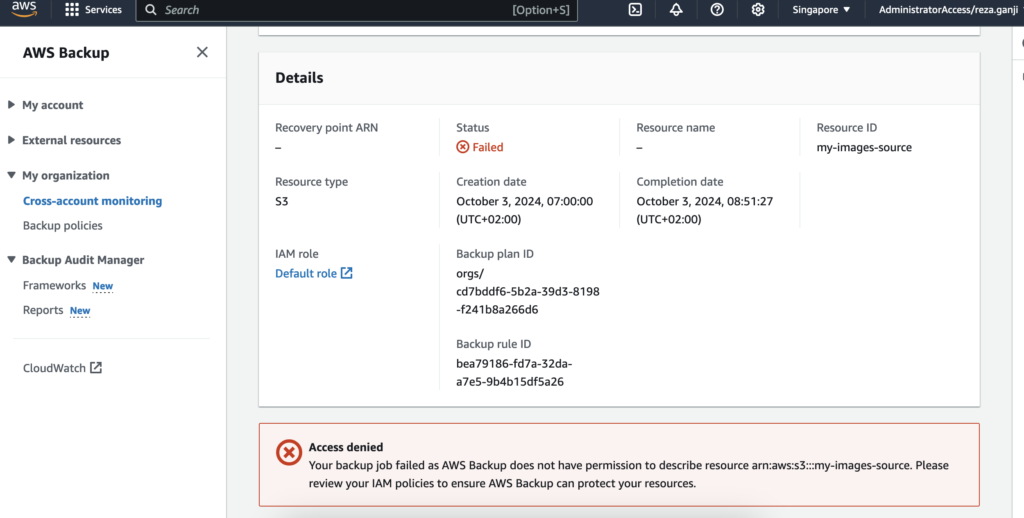

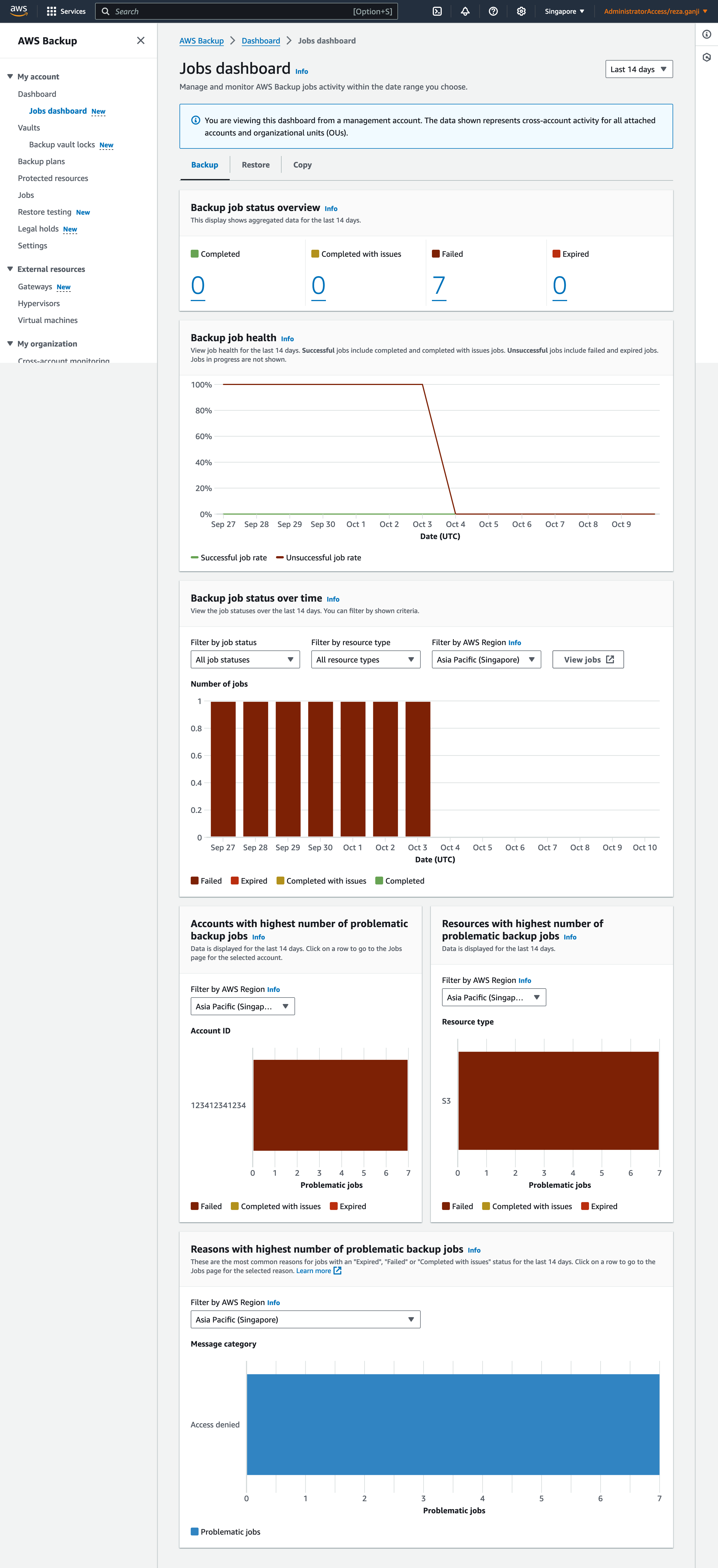

I have a failed job in my cross-account monitoring. Let’s have a quick look at it:

At the bottom of each failed backup job, you can see the reason the job failed. In this case, the role used by AWS Backup does not have sufficient privileges to access the S3 bucket.
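The same check can be scripted. The sketch below builds the filters for boto3's `list_backup_jobs` and pulls the failure reason out of each job. Passing `*` as the account ID is documented to return jobs across the organization when called from the management account; I am assuming it behaves the same from a delegated administrator account. The sample jobs are made up for illustration.

```python
def failed_job_filters(account_id="*", resource_type=None, message_category=None):
    """Keyword arguments for list_backup_jobs, limited to failed jobs."""
    filters = {"ByState": "FAILED", "ByAccountId": account_id}
    if resource_type:
        filters["ByResourceType"] = resource_type
    if message_category:
        filters["ByMessageCategory"] = message_category
    return filters


def failure_reasons(backup_jobs):
    """Return (account ID, job ID, reason) for every failed job in the list."""
    return [
        (job.get("AccountId"), job.get("BackupJobId"),
         job.get("StatusMessage", "no status message"))
        for job in backup_jobs
        if job.get("State") == "FAILED"
    ]


def list_failed_jobs(client, **filter_args):
    """Fetch all failed jobs; `client` is expected to be boto3.client('backup')."""
    jobs = []
    paginator = client.get_paginator("list_backup_jobs")
    for page in paginator.paginate(**failed_job_filters(**filter_args)):
        jobs.extend(page.get("BackupJobs", []))
    return jobs


# Hypothetical jobs shaped like entries of a list_backup_jobs response.
sample_jobs = [
    {"AccountId": "111111111111", "BackupJobId": "job-a", "State": "FAILED",
     "StatusMessage": "Access Denied"},
    {"AccountId": "222222222222", "BackupJobId": "job-b", "State": "COMPLETED"},
]
for account, job_id, reason in failure_reasons(sample_jobs):
    print(f"{account} {job_id}: {reason}")
```

A script like this is handy for a daily summary, while the console view remains the quicker option for ad hoc investigation.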

AWS Backup Jobs Dashboard

AWS Backup Jobs Dashboard is another tool I often find myself using. It provides a clear and detailed view of backup and restore jobs, allowing me to track the progress of each task. But how does it differ from AWS Backup Cross-Account Monitoring? Let’s break it down. The AWS Backup Jobs Dashboard gives me a real-time overview of all the backup and restore activities happening within a single AWS account. This includes details like job status, success rates, and any errors that might come up. It’s essentially my go-to interface when I need to understand what’s happening with backups right now—whether jobs are running, succeeded, failed, or are still pending.

This dashboard helps me monitor individual jobs, troubleshoot any issues immediately, and ensure my backup schedules are running smoothly. It’s all about real-time monitoring and operational control within a particular account.

For me, the Backup Jobs Dashboard is where I go when I need to get into the weeds—troubleshoot specific issues, track individual jobs, and make quick fixes. Cross-Account Monitoring, however, is where I zoom out to ensure the broader strategy is in place and working smoothly across all of AWS.

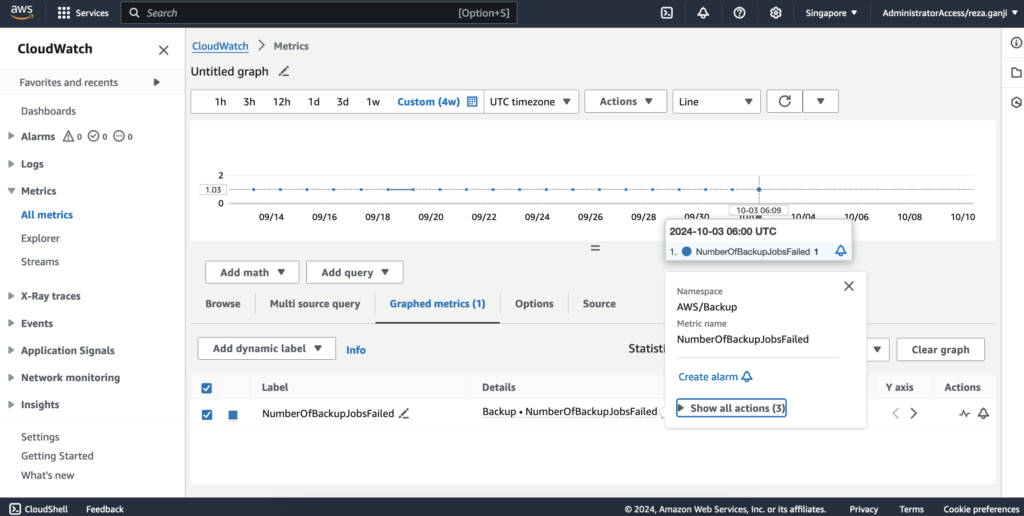

Backup Job Monitoring using CloudWatch

When managing backups, especially at scale, visibility is crucial. One of the tools that makes monitoring AWS Backup jobs more efficient is Amazon CloudWatch. By using CloudWatch with AWS Backup, I can set up a robust monitoring system that gives me real-time alerts and insights into my backup operations.

Amazon CloudWatch integrates seamlessly with AWS Backup to monitor all the activities of my backup jobs. With CloudWatch, I can collect metrics and set up alarms for different job statuses, like success, failure, or even pending states that take longer than expected. This means I don’t have to manually monitor the AWS Backup dashboard constantly—I can let CloudWatch handle that and notify me only when something needs my attention.

For example, if a critical backup fails, I can configure a CloudWatch Alarm to send me a notification via Amazon SNS (Simple Notification Service). That way, I can immediately jump in and resolve the issue. This level of automation helps keep my backup strategy proactive rather than reactive.
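A minimal sketch of such an alarm, written as the keyword arguments for boto3's `put_metric_alarm`. It uses the `NumberOfBackupJobsFailed` metric in the `AWS/Backup` namespace with the `BackupVaultName` dimension. The alarm name, vault name, topic ARN, one-hour period, and threshold are assumptions for this example.

```python
def failed_backup_alarm(sns_topic_arn, vault_name, threshold=1, period_seconds=3600):
    """Keyword arguments for put_metric_alarm on failed backup jobs in a vault."""
    return {
        "AlarmName": f"aws-backup-failed-jobs-{vault_name}",
        "AlarmDescription": "One or more AWS Backup jobs failed",
        "Namespace": "AWS/Backup",
        "MetricName": "NumberOfBackupJobsFailed",
        "Dimensions": [{"Name": "BackupVaultName", "Value": vault_name}],
        "Statistic": "Sum",
        "Period": period_seconds,
        "EvaluationPeriods": 1,
        "Threshold": threshold,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # No data points means no failed jobs were reported in the period.
        "TreatMissingData": "notBreaching",
        "AlarmActions": [sns_topic_arn],
    }


# Hypothetical topic ARN and vault name.
alarm = failed_backup_alarm(
    sns_topic_arn="arn:aws:sns:eu-central-1:111111111111:backup-alerts",
    vault_name="dora-vault",
)
print(alarm["AlarmName"])
```

To create the alarm, pass the dictionary to `boto3.client("cloudwatch").put_metric_alarm(**alarm)`. Treating missing data as not breaching keeps the alarm quiet on days when nothing fails.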

Another powerful aspect of using CloudWatch is automation with CloudWatch Events (now Amazon EventBridge). I can create rules that trigger specific actions based on the state of a backup job. For example, if a backup job fails, a rule can trigger an AWS Lambda function to retry the backup automatically or notify the relevant teams via Slack or email. This helps streamline the workflow and reduces the manual intervention needed to keep backups running smoothly.
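As a rough sketch of that idea: the event pattern below matches failed backup jobs, and the Lambda handler turns the event into a short message. The pattern fields (`aws.backup`, `Backup Job State Change`, `state`) follow the AWS Backup event format as I know it; the detail field names read by the handler and the sample event are assumptions, and the actual Slack, email, or retry logic is left out.

```python
import json

# Event pattern for a rule that fires only when a backup job fails.
FAILED_BACKUP_PATTERN = {
    "source": ["aws.backup"],
    "detail-type": ["Backup Job State Change"],
    "detail": {"state": ["FAILED"]},
}


def lambda_handler(event, context):
    """Summarize a failed backup job event; forwarding is left to the reader."""
    detail = event.get("detail", {})
    message = (
        f"Backup job {detail.get('backupJobId', 'unknown')} "
        f"for {detail.get('resourceArn', 'unknown resource')} "
        f"ended in state {detail.get('state', 'unknown')}"
    )
    # A real function would post this to Slack or SNS, or start a retry.
    print(message)
    return {"message": message}


# Hypothetical event for a local dry run.
sample_event = {
    "source": "aws.backup",
    "detail-type": "Backup Job State Change",
    "detail": {
        "backupJobId": "job-a",
        "resourceArn": "arn:aws:s3:::example-bucket",
        "state": "FAILED",
    },
}
result = lambda_handler(sample_event, None)
print(json.dumps(FAILED_BACKUP_PATTERN))
```

The JSON printed at the end is what you would paste into the rule's event pattern, with the Lambda function set as the rule target.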

The reason I like using CloudWatch with AWS Backup is simple—it’s all about proactive monitoring and automation. AWS Backup alone gives me good visibility, but when I integrate it with CloudWatch, I get the power of real-time alerts, customizable dashboards, and automated responses to backup events. This means fewer surprises, faster response times, and ultimately a more resilient backup strategy.

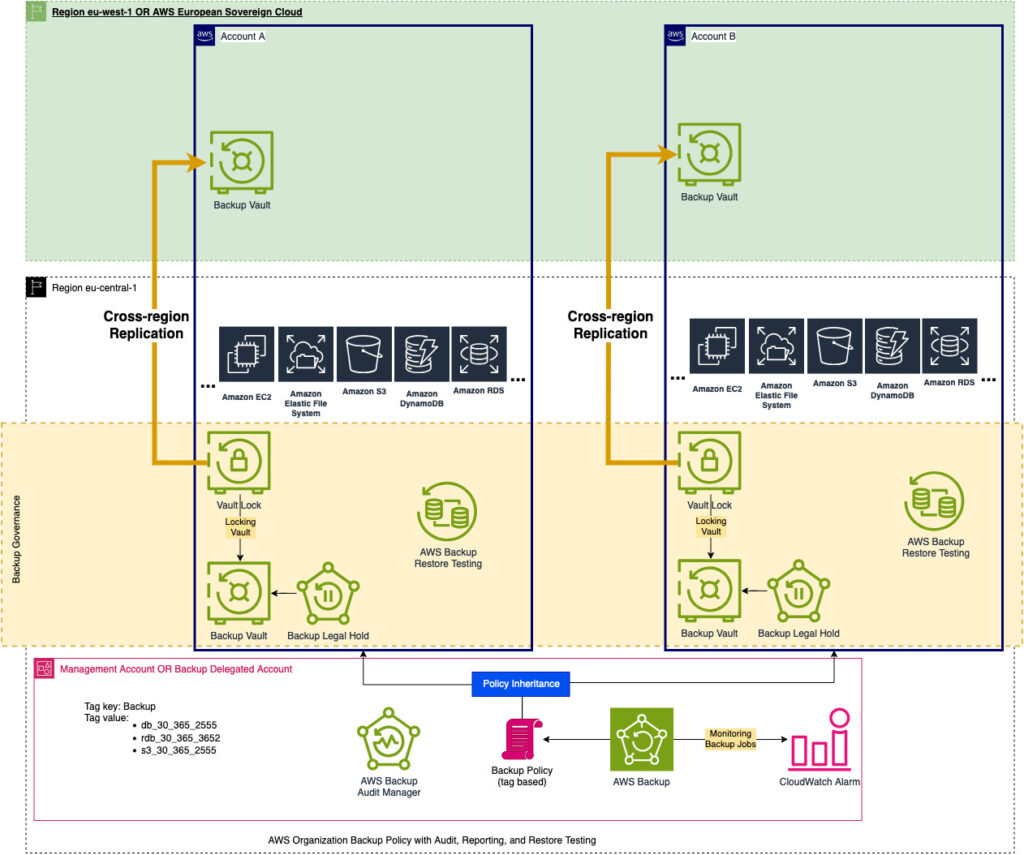

As a reminder, here is what the target architecture diagram looks like:

Closing Thoughts

Throughout this series, we have explored the comprehensive journey of achieving compliance with AWS Backup under the Digital Operational Resilience Act (DORA). We started by understanding the foundational requirements, from setting up backup strategies, retention policies, and compliance measures to implementing key AWS services such as Backup Vault, Vault Lock, Legal Holds, and Audit Manager. Each of these tools helps ensure that backup and restoration strategies not only meet regulatory standards but also provide operational resilience and scalability.

One of the highlights has been seeing how AWS Backup features, such as restore testing and automated compliance auditing, can reduce the manual effort and complexity associated with meeting DORA requirements. Restore testing allows us to perform automated health checks of our backups, ensuring recovery points are restorable and compliant without the need for manual intervention. Meanwhile, Audit Manager provides a powerful mechanism for generating and managing compliance reports that are crucial during audits.

Finally, monitoring and alarming using tools like Amazon CloudWatch gives us proactive oversight of backup processes across accounts, ensuring that any failures or discrepancies are addressed promptly. With Cross-Account Monitoring, Jobs Dashboard, and CloudWatch integration, we can stay confident that our entire backup strategy remains operationally resilient and compliant.

Conclusion

In today’s evolving regulatory landscape, compliance and resilience are more important than ever—especially in the financial services industry, where data integrity and availability are critical. This series has emphasized not just the how but also the why behind building a robust backup strategy using AWS tools to meet DORA standards.

The digital financial landscape is only growing more complex, but by effectively leveraging AWS Backup services, we can ensure our cloud infrastructure remains resilient, compliant, and ready to handle any operational challenges that arise.

Thank you for joining me on this journey to master AWS Backup in the context of DORA compliance. I hope this series has provided you with the tools and insights needed to build a robust and scalable backup strategy for your organization.

End of Part 5 – Final Part!
