When we architect an application, it is essential to consider current metrics and monitoring logs to make its design future-proof. Sometimes, however, we do not have the logs needed to make the right decisions. In that case, we deploy the application on an architecture that seems optimized based on the metrics we do have, then let it run for a while to capture the logs required to make any necessary changes. In our case, the application grew far beyond anything we had anticipated!

The COVID-19 pandemic has increased the use of all online applications and systems in our organization, whether modern or legacy.

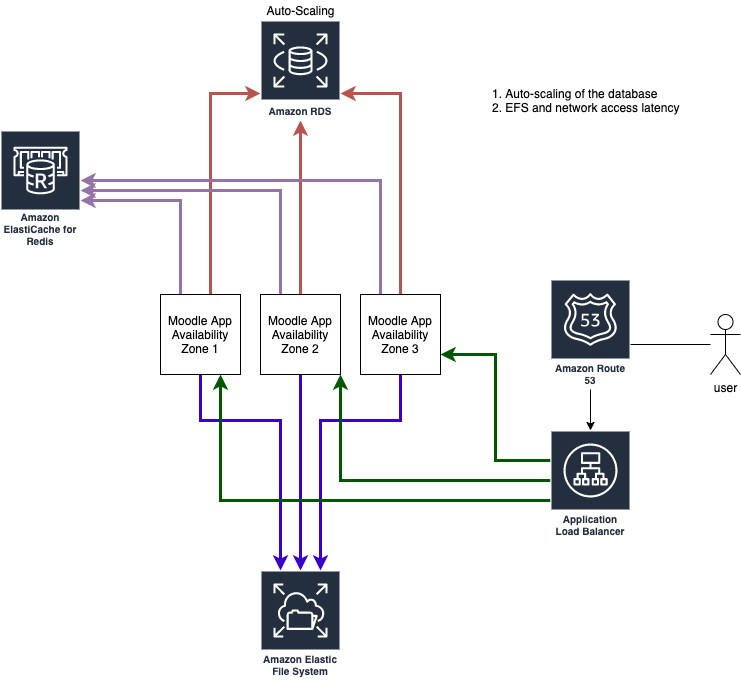

We have an application that spans three Availability Zones (AZs), with an EFS mount target in each AZ, and that retrieves data from an Amazon Aurora Serverless database. Over the past two weeks, we noticed that the application was getting slower and slower. The EC2 and database activity logs did not reveal the cause, which suggested that something could be wrong with the storage. Unexpectedly, the issue was in fact caused by the I/O limit of EFS in General Purpose performance mode.
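One way to confirm this kind of bottleneck is the `PercentIOLimit` CloudWatch metric (namespace `AWS/EFS`, dimension `FileSystemId`), which reports how close a General Purpose file system is to its I/O limit. The sketch below pulls two weeks of hourly maxima with boto3's `get_metric_statistics`. It assumes the caller passes in a CloudWatch client (for example `boto3.client("cloudwatch")`); the helper names and the 95% threshold are hypothetical choices for illustration, not AWS recommendations.

```python
from datetime import datetime, timedelta, timezone
from typing import Any, List, Tuple


def percent_io_limit_peaks(
    cloudwatch: Any,          # e.g. boto3.client("cloudwatch"); injected so it can be stubbed
    file_system_id: str,
    days: int = 14,           # "the past two weeks"
    period_seconds: int = 3600,
) -> List[Tuple[datetime, float]]:
    """Return (timestamp, maximum PercentIOLimit) pairs, oldest first."""
    end = datetime.now(timezone.utc)
    start = end - timedelta(days=days)
    response = cloudwatch.get_metric_statistics(
        Namespace="AWS/EFS",
        MetricName="PercentIOLimit",
        Dimensions=[{"Name": "FileSystemId", "Value": file_system_id}],
        StartTime=start,
        EndTime=end,
        Period=period_seconds,
        Statistics=["Maximum"],
    )
    # CloudWatch does not guarantee datapoint order, so sort by timestamp.
    points = sorted(response["Datapoints"], key=lambda p: p["Timestamp"])
    return [(p["Timestamp"], p["Maximum"]) for p in points]


def periods_near_limit(peaks: List[Tuple[datetime, float]], threshold: float = 95.0) -> int:
    """Count periods whose peak reached the (hypothetical) threshold percentage."""
    return sum(1 for _, value in peaks if value >= threshold)
```

A file system whose peaks sit near 100% for many periods is running into the General Purpose I/O ceiling rather than a compute or database problem.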

In General Purpose performance mode, read and write operations consume different numbers of file operations. Reading data or metadata consumes one file operation; writing data or updating metadata consumes five. A file system can support up to 35,000 file operations per second. This might be 35,000 read operations, 7,000 write operations, or a combination of the two.

For details, see the "Quotas for Amazon EFS file systems" section of *Amazon EFS quotas and limits* in the Amazon EFS User Guide.
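The budget above boils down to reads × 1 + writes × 5 ≤ 35,000 per second. Here is a minimal Python sketch of that arithmetic using the limits quoted above; the function names are illustrative only.

```python
# Limits quoted above for General Purpose performance mode.
GP_FILE_OPS_PER_SECOND = 35_000
READ_COST = 1   # reading data or metadata
WRITE_COST = 5  # writing data or updating metadata


def file_ops_per_second(reads_per_second: int, writes_per_second: int) -> int:
    """Total file operations per second consumed by a read/write mix."""
    return reads_per_second * READ_COST + writes_per_second * WRITE_COST


def fits_general_purpose(reads_per_second: int, writes_per_second: int) -> bool:
    """True if the mix stays within the General Purpose limit."""
    return file_ops_per_second(reads_per_second, writes_per_second) <= GP_FILE_OPS_PER_SECOND


def max_writes_per_second(reads_per_second: int) -> int:
    """Largest whole number of writes/s that still fits alongside the given reads/s."""
    remaining = max(GP_FILE_OPS_PER_SECOND - reads_per_second * READ_COST, 0)
    return remaining // WRITE_COST


if __name__ == "__main__":
    print(max_writes_per_second(0))          # 7000
    print(max_writes_per_second(20_000))     # 3000
    print(fits_general_purpose(35_000, 1))   # False
```

For example, a workload issuing 20,000 reads per second leaves 15,000 file operations, which is room for only 3,000 writes per second.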

The performance mode of an EFS file system cannot be changed after the file system is created, and with almost 2 TB of data in the file system, we were concerned about the length of the downtime window. AWS suggests using AWS DataSync to migrate data from on-premises storage or from other AWS storage services. Although DataSync could have handled the migration, we already had AWS Backup configured, so we used AWS Backup to take a complete backup of the EFS file system and restore it as a new file system in Max I/O mode.
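For anyone who prefers to script this step, here is a minimal sketch of the same idea through the AWS Backup API with boto3. It assumes an existing EFS recovery point in a backup vault and an IAM role that AWS Backup can assume for the restore; the helper names are hypothetical. It starts from the recovery point's default restore metadata (`get_recovery_point_restore_metadata`) and overrides `newFileSystem`, `PerformanceMode`, and `CreationToken` before calling `start_restore_job`. The EFS restore metadata keys are worth double-checking against the AWS Backup documentation before relying on this.

```python
import uuid
from typing import Any, Dict


def max_io_restore_metadata(original: Dict[str, str], creation_token: str) -> Dict[str, str]:
    """Copy the default restore metadata, targeting a new Max I/O file system."""
    metadata = dict(original)                    # keep source settings such as Encrypted/KmsKeyId
    metadata["newFileSystem"] = "true"           # restore into a new file system, not in place
    metadata["PerformanceMode"] = "maxIO"        # EFS value for Max I/O mode
    metadata["CreationToken"] = creation_token   # unique token for the new file system
    return metadata


def restore_efs_as_max_io(
    backup: Any,              # e.g. boto3.client("backup"); injected so it can be stubbed
    vault_name: str,
    recovery_point_arn: str,
    iam_role_arn: str,
) -> str:
    """Start an asynchronous restore job and return its ID."""
    defaults = backup.get_recovery_point_restore_metadata(
        BackupVaultName=vault_name,
        RecoveryPointArn=recovery_point_arn,
    )["RestoreMetadata"]
    response = backup.start_restore_job(
        RecoveryPointArn=recovery_point_arn,
        Metadata=max_io_restore_metadata(defaults, creation_token=str(uuid.uuid4())),
        IamRoleArn=iam_role_arn,
        ResourceType="EFS",
    )
    return response["RestoreJobId"]
```

Passing the client in, rather than creating it inside the function, keeps the sketch testable with a stub. The restore runs asynchronously, so the returned job ID can be polled with `describe_restore_job` until the new file system is ready.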

Note that Max I/O performance mode supports a higher number of file operations per second, but at the cost of slightly higher latency per file operation.